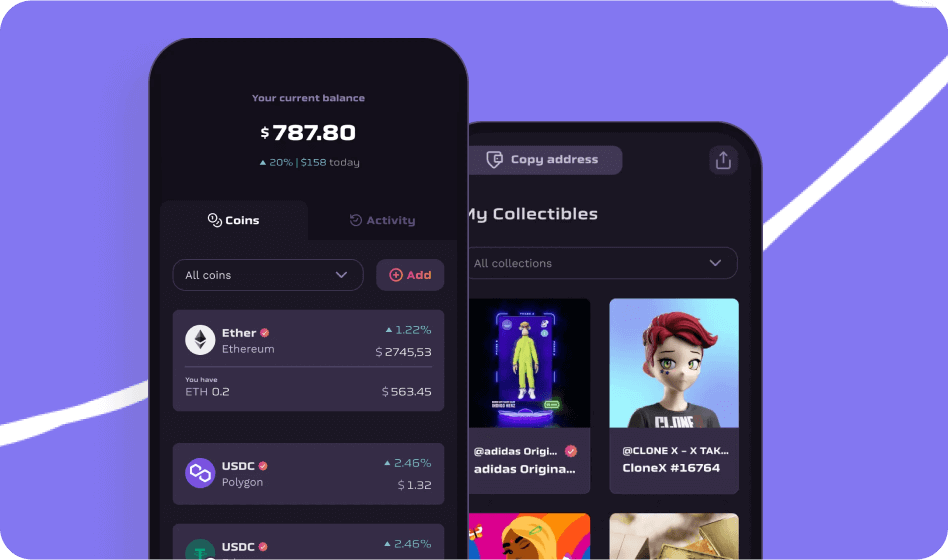

We make complex technology easy to use

What We Do

What Drives Us

Where others see challenges, we see endless possibilities. These challenges spark our creativity and drive our work. That is how our culture was built, and it is what we believe in.

Collaboration, Honesty, Innovation, Responsibility, Efficiency

Our values are battle-tested